Raj Joseph

Founder & CEO, DQLabs

Ensure Effective Data Quality Management in Apache Airflow

Overview

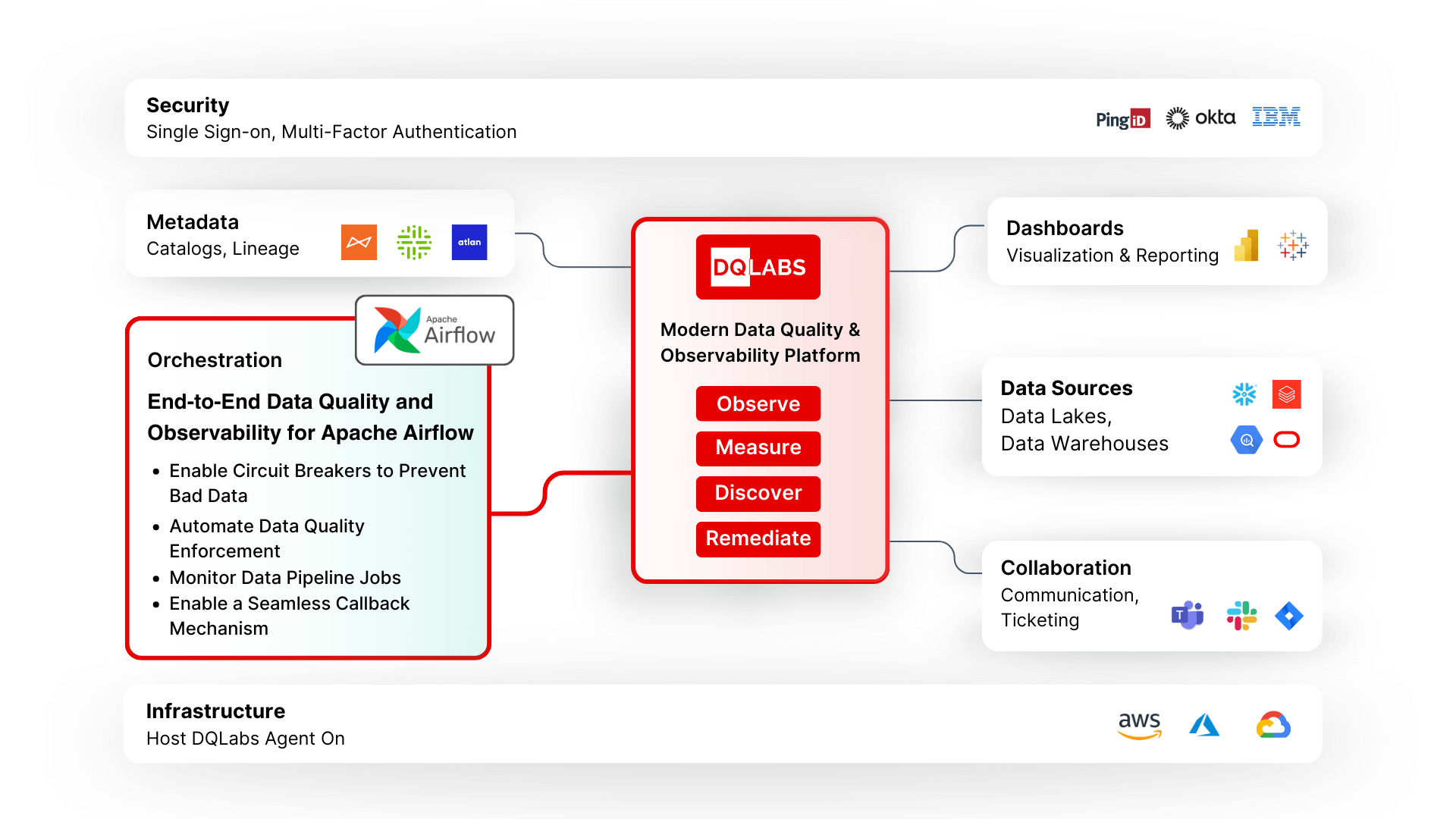

Apache Airflow is an open-source platform for orchestrating data engineering workflows. Users author workflows programmatically in Python, then schedule and monitor them through the Airflow user interface. Airflow models each workflow as a directed acyclic graph (DAG): Python code defines the tasks and their dependencies, and Airflow handles scheduling and execution. DAGs can run on a predetermined schedule (hourly, daily, etc.) or in response to external event triggers.
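To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library rather than Airflow itself. The task names are hypothetical, and `graphlib.TopologicalSorter` stands in for the ordering logic an orchestrator applies: a task becomes eligible to run only after every task it depends on has finished.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL pipeline: each task maps to the set of tasks it depends on.
# These names are illustrative and do not come from Airflow or DQLabs.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "quality_check": {"transform"},
    "load": {"quality_check"},
}


def execution_order(deps):
    """Return one valid run order for the tasks.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    which is why the graph has to be acyclic.
    """
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Because this pipeline is a simple chain, only one order is valid. In a graph with independent branches, several orders can be valid, and a scheduler is free to run those branches in parallel.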

Connecting Airflow to DQLabs lets users monitor all jobs in the pipeline. Integrating DQLabs with Apache Airflow helps keep data trustworthy throughout the entire ETL (Extract, Transform, Load) process, which in turn improves the efficiency of your data pipeline. You can run data quality assessments at any point in your Airflow data pipelines. These checks help you identify potential issues early, monitor the health of your pipelines, isolate faulty data, and stop it from spreading downstream.

Data Quality and Observability for Apache Airflow

The circuit breaker functionality lets users define granular conditions based on the data quality score of individual assets. A condition can specify the connection name, database name, schema name, asset name or asset ID, and the data quality condition itself (a DQScore threshold), among other attributes. The circuit breaker activates when the specified DQScore condition is met (e.g., the DQScore drops below a certain threshold). With the integration, users can create and manage these conditions through Airflow, which gives them more control over the pipeline's data quality.
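The circuit breaker logic described above can be sketched in plain Python. This is an illustration under stated assumptions, not the DQLabs API: the class, field, and function names are hypothetical, and the score is passed in directly because how it is fetched from DQLabs is not covered here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CircuitBreakerCondition:
    """Hypothetical container for the attributes a condition can specify."""
    connection_name: str
    database_name: str
    schema_name: str
    asset_name: str
    min_dq_score: float  # the breaker trips when the score falls below this


class CircuitBreakerTripped(Exception):
    """Raised to halt the pipeline when an asset fails its condition."""


def check_circuit_breaker(condition, dq_score):
    """Return True if the asset passes; raise CircuitBreakerTripped otherwise."""
    if dq_score < condition.min_dq_score:
        asset = (
            f"{condition.connection_name}.{condition.database_name}."
            f"{condition.schema_name}.{condition.asset_name}"
        )
        raise CircuitBreakerTripped(
            f"DQScore {dq_score} for {asset} is below "
            f"the threshold {condition.min_dq_score}"
        )
    return True


# Example values are made up for illustration.
condition = CircuitBreakerCondition(
    connection_name="warehouse",
    database_name="analytics",
    schema_name="public",
    asset_name="orders",
    min_dq_score=80.0,
)
passed = check_circuit_breaker(condition, dq_score=92.5)
```

Called from inside an Airflow task, a check like this fails the task when it raises, and under Airflow's default trigger rule (`all_success`) the downstream tasks then do not run, so faulty data stops at that point in the pipeline.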