Ensure Effective Data Quality Management in ADLS

Overview

Azure Data Lake Storage (ADLS) is a highly scalable and secure data lake solution provided by Microsoft Azure. It is designed to handle large volumes of data, making it ideal for big data analytics and storage needs.

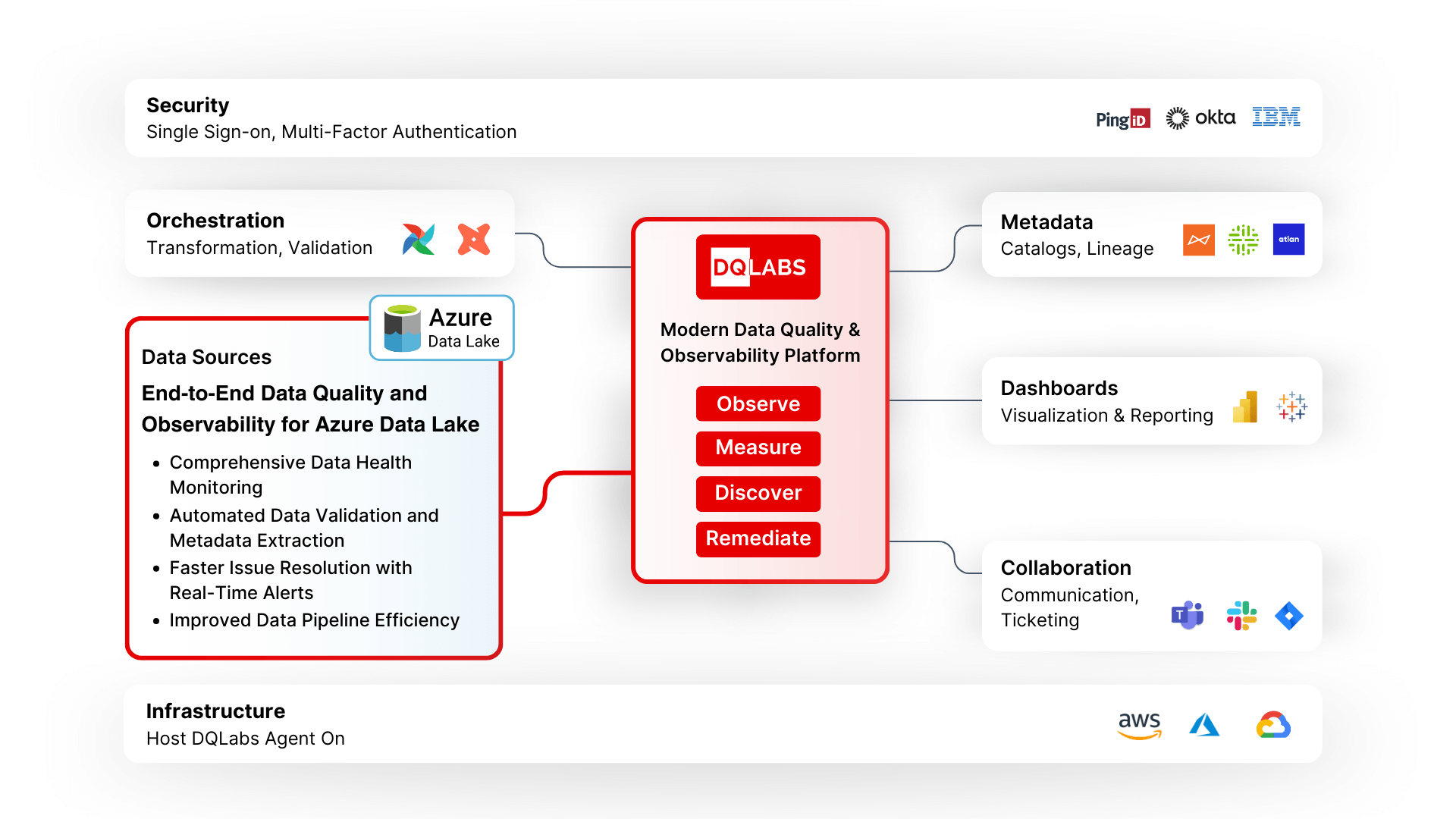

Integrating DQLabs with Azure Data Lake Storage (ADLS) provides organizations with an advanced, automated solution to monitor, manage, and ensure the health of their data lakes. By connecting DQLabs to ADLS, businesses can perform critical data quality checks, identify issues, and improve overall data governance, leading to more reliable, accessible, and actionable data.

Data Quality and Observability for ADLS

DQLabs runs foundational data health checks on files stored in Azure Data Lake Storage (ADLS). It uses Kusto (Azure Data Explorer) clusters to create external tables over ADLS blob files, then runs quality measures against those tables to assess the overall health of the data in real time. Measures such as freshness, volume, and schema are applied automatically to detect anomalies and issues early.

Because these measures run automatically, data in ADLS is checked against predefined standards without manual intervention. The freshness measure verifies that data is updated at the expected frequency, the volume measure verifies that datasets contain the expected number of records, and the schema measure verifies that the data conforms to the expected structure, so structural issues are detected early.
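To make the three measures concrete, here is a minimal Python sketch of what each check amounts to. It is not DQLabs code: the function names, parameters, and thresholds are hypothetical and chosen only for illustration, and the real product may evaluate these measures differently (for example, by learning thresholds from history).

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_modified, max_age, now=None):
    """Pass if the data was updated within the expected window (hypothetical check)."""
    now = now or datetime.now(timezone.utc)
    return now - last_modified <= max_age


def check_volume(row_count, min_rows, max_rows):
    """Pass if the record count falls inside the expected range (hypothetical check)."""
    return min_rows <= row_count <= max_rows


def check_schema(actual, expected):
    """Return a list of differences between the actual and the expected schema.

    Both arguments map column names to type names. An empty list means the
    schemas match.
    """
    problems = []
    for column, dtype in expected.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != dtype:
            problems.append(
                f"type mismatch on {column}: expected {dtype}, got {actual[column]}"
            )
    for column in actual:
        if column not in expected:
            problems.append(f"unexpected column: {column}")
    return problems
```

A file last modified 12 hours ago passes `check_freshness` with `max_age=timedelta(hours=24)`, and `check_schema` returns one message per missing, mistyped, or unexpected column.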

DQLabs creates external tables on ADLS files (e.g., Parquet, JSON, CSV, Avro) via Kusto clusters, allowing metadata to be extracted automatically during the execution of health measures. Because every format is exposed through the same table interface, the relevant information for quality assessment is consolidated in one place regardless of how the files are stored. Once the data health measures have run, DQLabs cleans up by dropping the external table. An external table is only a definition that points at the files, so dropping it leaves the underlying data in ADLS untouched and keeps the impact on performance and storage usage in Azure minimal.
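The create, measure, and drop lifecycle described above can be sketched as a sequence of Kusto commands. The Python below only builds the command text; it does not connect to a cluster. The table name, column schema, and storage URI are hypothetical placeholders, and the commands DQLabs actually issues are not documented here, so treat this as an illustration of the pattern rather than the product's implementation.

```python
def create_external_table_command(table, columns, data_format, storage_uri):
    """Build the text of a Kusto `.create external table` management command."""
    schema = ", ".join(f"{name}:{dtype}" for name, dtype in columns.items())
    return "\n".join([
        f".create external table {table} ({schema})",
        "kind=storage",
        f"dataformat={data_format}",
        "(",
        f"    h@'{storage_uri}'",  # h@ marks the string as obfuscated in Kusto logs
        ")",
    ])


def drop_external_table_command(table):
    """Build the command that removes the external table definition."""
    return f".drop external table {table}"


def lifecycle_commands(table, columns, data_format, storage_uri):
    """Return the commands in the order a health-check run would issue them."""
    return [
        create_external_table_command(table, columns, data_format, storage_uri),
        f"external_table('{table}') | count",      # volume: number of records
        f"external_table('{table}') | getschema",  # schema: column names and types
        drop_external_table_command(table),
    ]
```

For example, `lifecycle_commands("dq_tmp_orders", {"order_id": "long", "amount": "real"}, "parquet", "<storage connection string>")` returns four strings: the create command, two queries, and the drop command. The connection string format depends on how the cluster authenticates to the storage account and is left as a placeholder.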

DQLabs helps identify issues such as missing, incomplete, or out-of-date data in ADLS, enabling teams to take corrective action before poor-quality data affects analytics or reporting. Running automated checks such as freshness and schema validation helps ensure that the data used for decision-making is accurate and of high quality.

By using DQLabs to validate data directly in ADLS, organizations can catch issues early in the pipeline before they affect downstream systems or processes. This helps to reduce the need for manual interventions or rework and ensures that data pipelines run smoothly and efficiently. With DQLabs performing the heavy lifting of data quality checks, teams can focus more on value-added tasks like data analytics and business strategy.

DQLabs is designed to scale with growing data volumes in ADLS. As the amount of data stored in Azure Data Lake grows, DQLabs can perform checks on large datasets without requiring significant infrastructure changes. Whether handling schema-bearing formats (Parquet, Avro), delimited text (CSV), or semi-structured data (JSON), the integration lets data quality management keep pace with increasing data demands.

Automating data quality checks and metadata extraction through DQLabs reduces the need for manual data validation efforts, leading to significant cost savings in labor and operational overhead. By detecting and resolving data quality issues early in ADLS, businesses can avoid costly downstream impacts such as bad data entering analytics systems or requiring extensive remediation efforts later on.

The integration with DQLabs provides real-time data observability, allowing stakeholders to track data quality and health continuously. This holistic view of data quality across Azure Data Lake Storage helps organizations stay on top of data health, ensuring that teams can act quickly on any issues that arise. DQLabs also provides transparent reports and dashboards that make it easy to monitor data quality trends, track historical issues, and gain insights into data quality performance over time.