Data is increasingly becoming the driving force of every organization in the modern world. Organizations want to leverage their data landscape to ensure that data-driven decision-making is a core part of their business operations. For this to happen, organizations need robust data quality management practices to increase trust in their data landscape.

To use an analogy: if you put low-quality fuel in your vehicle, it can reduce the car’s performance and, in some cases, seriously damage it. The implications of your organization making decisions based on low-quality data can be similarly catastrophic. According to a statistic published by Gartner in 2021, poor data quality costs organizations an average of $12.9 million every year [1].

What you will learn

TL;DR: Why Data Quality Management is Crucial for Organizational Success

- Poor data quality costs millions, hindering effective business decisions.

- Data Quality Management (DQM) ensures data is reliable, accurate, and fit for purpose.

- Key DQM components include profiling, cleansing, standardization, validation, governance, and automation.

- Six core dimensions define data quality: accuracy, completeness, consistency, uniqueness, validity, and timeliness.

- DQM is an ongoing cycle that requires continuous improvement and robust frameworks.

- Effective DQM drives data-driven decisions, boosts productivity, strengthens competitive advantage, improves financial health, and ensures compliance.

To mitigate such risks, organizations need to follow data quality management practices, which ensure that their business decisions are driven by trustworthy, high-quality data. In this blog, we will look at the key data quality dimensions and at how organizations should plan for robust data quality management practices. But first, let’s start with the definition of data quality.

Data quality is defined as the reliability of data, characterized by the ability of data to serve its intended purpose. Data is considered to be of high quality if it is accurate, complete, unique, valid, fresh, and consistent.

What is Data Quality Management?

Data quality management is a set of practices, tools, and capabilities that ensure the delivery of accurate, complete, and fresh data. A good data quality management system includes a variety of features and capabilities to ensure data quality and the trustworthiness of organizational data.

These capabilities enable organizations to identify and resolve data quality issues, ensuring the delivery of high-quality, trusted data to end users. Below are key components of an effective data quality management system:

Data Profiling

Data profiling is the process of exploring and analyzing data structure, content, data types, relationships between datasets, and data quality issues. It allows users to identify basic quality problems such as missing values, outliers, and inconsistencies.

Having a comprehensive profile of your data assets is a critical first step in assessing data quality. The insights gathered through data profiling also play a crucial role in guiding the data cleansing process.
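To make the idea of a profile concrete, here is a minimal sketch in plain Python. The `profile` function and the sample records are hypothetical, made up for illustration; a real profiling tool reports far more (distributions, relationships between datasets, outliers).

```python
from collections import Counter

def profile(rows):
    """Return per-column counts of missing values, distinct values, and Python types."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report

# Hypothetical sample records with a missing age, a mixed-type age, and an empty city
rows = [
    {"id": 1, "age": 34, "city": "Austin"},
    {"id": 2, "age": None, "city": "austin"},
    {"id": 3, "age": "41", "city": ""},
]
report = profile(rows)
```

In this sample, the `age` column would show one missing value and a mix of `int` and `str` entries, which is exactly the kind of finding that feeds the cleansing step.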

Data Cleansing

While data profiling provides an overview of data health, data cleansing focuses on correcting discrepancies. This includes fixing unknown data types, removing duplicate records, and addressing substandard data representations.

By ensuring data follows the defined format and standard, data cleansing supports smoother data integration efforts and helps maintain data integrity. Accurate and complete data is essential for effective, data-driven decision-making.
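As a rough illustration of what cleansing can look like, the sketch below trims whitespace, coerces a field to a single type, and drops duplicate records. The `cleanse` function, the field names, and the rule for what counts as a duplicate are all assumptions made for this example.

```python
def cleanse(rows):
    """Trim names, coerce age to int where possible, and drop duplicates.

    Two rows count as duplicates here if they have the same name
    (ignoring case) and the same age. That rule is an assumption.
    """
    seen = set()
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        try:
            age = int(row["age"])
        except (TypeError, ValueError):
            age = None  # leave unparseable values empty rather than guessing
        key = (name.lower(), age)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "age": age})
    return cleaned

# Hypothetical raw records
raw = [
    {"name": " Ana Silva ", "age": "34"},
    {"name": "Ana Silva", "age": 34},
    {"name": "Ben Okoro", "age": "unknown"},
]
cleaned = cleanse(raw)
```

The first two records collapse into one once the whitespace and type differences are removed, and the unparseable age is left empty for a later completeness check to catch.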

Data Standardization

Data standardization ensures that data follows a common terminology and remains consistent across different systems and applications. This is especially important when data is shared across multiple teams.

Standardized data reduces integration challenges, improves consistency, and helps maintain data integrity. It also ensures that all datasets follow the same structure, facilitating better collaboration and trust in the data.
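One simple way to enforce a common terminology is a lookup table that maps the variants seen in different systems to a single agreed value. The sketch below assumes a hypothetical `COUNTRY_ALIASES` mapping; the entries are illustrative, not a complete list.

```python
# Hypothetical mapping from observed variants to one agreed code
COUNTRY_ALIASES = {
    "us": "US",
    "usa": "US",
    "u.s.a.": "US",
    "united states": "US",
    "gb": "GB",
    "uk": "GB",
    "united kingdom": "GB",
}

def standardize_country(value):
    """Map a free-text country value to its canonical code, or None if unknown."""
    key = value.strip().lower()
    return COUNTRY_ALIASES.get(key)
```

Returning `None` for unknown values, instead of passing them through, makes unmapped variants visible so they can be reviewed and added to the mapping.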

Data Validation

Data validation is a critical component of data quality management. It involves applying rules and checks to verify that datasets meet required standards and criteria.

For example, in a clinical study where participants are all women aged 30-60 years, a data validation rule might specify: Gender: Female, Age: 30-60 years. Any data outside these parameters would be flagged as invalid. This process ensures that only accurate and relevant data is used.
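The clinical study rule above could be sketched as follows. The `validate_participant` function and the record layout are assumptions for illustration only.

```python
def validate_participant(record):
    """Return a list of rule violations for one participant record."""
    errors = []
    if record.get("gender") != "Female":
        errors.append("gender must be Female")
    age = record.get("age")
    # Reject missing or non-integer ages as well as out-of-range ones
    if not isinstance(age, int) or not 30 <= age <= 60:
        errors.append("age must be between 30 and 60")
    return errors
```

A record such as `{"gender": "Female", "age": 45}` produces an empty list, while a record that breaks either rule is flagged with a message for each violation.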

Data Governance

Data governance establishes policies, procedures, and standards to manage and maintain data quality across an organization. It ensures consistency in data definitions, formats, and usage.

A strong governance framework also promotes accountability by assigning data stewardship roles. This ensures that data quality is continuously monitored, maintained, and improved over time.

Automated Data Quality and Observability

In today’s complex data environments, detecting and troubleshooting data quality issues manually is challenging. Automated data quality tools are essential for organizations committed to delivering high-quality data.

These modern data quality tools provide real-time monitoring, troubleshooting, and automated business quality checks, ensuring consistent data delivery. They also enable organizations to proactively monitor data pipelines and model performance, reducing the financial impact of poor data quality.

Key Dimensions of Data Quality Management

Data quality dimensions provide a useful benchmark for assessing an organization’s data landscape. Assessing data along multiple dimensions gives a clear picture of the organization’s data quality practices, and an overall data quality score built from those dimensions makes it easier for data users to judge whether the data is fit for its intended purpose. There are six key dimensions of data quality. Let’s explore each in detail.
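There is no single standard formula for an overall score. As one possible sketch, assuming each dimension has already been scored between 0 and 1, a weighted average could look like this (the `overall_score` function and the weights are hypothetical):

```python
def overall_score(dimension_scores, weights=None):
    """Weighted average of per-dimension scores, each expected in [0, 1].

    With no weights given, every dimension counts equally.
    """
    weights = weights or {name: 1.0 for name in dimension_scores}
    total_weight = sum(weights[name] for name in dimension_scores)
    weighted_sum = sum(
        score * weights[name] for name, score in dimension_scores.items()
    )
    return weighted_sum / total_weight

# Hypothetical scores for two of the six dimensions
scores = {"accuracy": 1.0, "completeness": 0.5}
equal = overall_score(scores)
weighted = overall_score(scores, {"accuracy": 3.0, "completeness": 1.0})
```

Weights let an organization reflect which dimensions matter most for a given use case, for example weighting timeliness more heavily for real-time reporting.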

Accuracy

Data accuracy refers to the degree to which data correctly reflects the real-world event or object it describes. There are two kinds of accuracy: semantic and syntactic.

Semantic accuracy refers to the correctness of the meaning or interpretation of data. Let’s say a database table has a column for product categories. In this table, a product is incorrectly categorized as “Electronics” when it actually belongs to the “Sports” product category. This is an example of semantic inaccuracy.

Syntactic accuracy focuses on the correct data format, structure, or pattern. For example, if a customer’s credit card number is supposed to be 16 digits long, a syntactic accuracy check verifies that each entry contains exactly 16 digits.
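A syntactic check like the 16-digit rule can be sketched with a regular expression. The function name is hypothetical, and ignoring spaces and hyphens is an assumption about how numbers are entered. Note that this checks only the format, not whether the card number actually exists.

```python
import re

# Exactly 16 ASCII digits
CARD_PATTERN = re.compile(r"[0-9]{16}")

def is_syntactically_valid_card(value):
    """Check the 16-digit format after removing spaces and hyphens."""
    digits = value.replace(" ", "").replace("-", "")
    return CARD_PATTERN.fullmatch(digits) is not None
```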

Completeness

Data is considered to be complete when it fulfills certain expectations of comprehensiveness set by an organization. It indicates whether there are enough attributes of data to draw meaningful conclusions from it.

In a customer database for an e-commerce platform, the “phone number” field is crucial for customer communication and order processing. Data completeness in this context means that every customer record should include a valid phone number. Null values in the “phone number” field indicate incomplete customer profiles, which could negatively affect order updates, delivery confirmations, and customer support queries.
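Completeness for a single field is often expressed as the share of records where that field is filled in. A minimal sketch, assuming customer records are dictionaries with a hypothetical `phone_number` key:

```python
def completeness(rows, field):
    """Share of rows where `field` is present and non-blank (0.0 to 1.0)."""
    if not rows:
        return 1.0  # assumption: an empty dataset has nothing missing
    filled = sum(1 for row in rows if str(row.get(field) or "").strip())
    return filled / len(rows)

# Hypothetical customer records: two usable phone numbers out of four
customers = [
    {"id": 1, "phone_number": "555-0100"},
    {"id": 2, "phone_number": None},
    {"id": 3, "phone_number": "555-0101"},
    {"id": 4, "phone_number": "  "},
]
phone_completeness = completeness(customers, "phone_number")
```

Here blank strings count as missing alongside nulls, since a phone number made of spaces is no more useful for order updates than no value at all.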

Consistency

Data consistency ensures that data values obtained from different, independent datasets or data sources do not contradict each other. With an ever-growing number of data sources, it is crucial for organizations to make sure that data integrated from different sources is standardized before it is consumed.
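A basic consistency check compares the same field for the same entity across two sources. In the sketch below, the `crm` and `billing` sources, the customer keys, and the `find_conflicts` function are all hypothetical.

```python
def find_conflicts(source_a, source_b, field):
    """Return keys present in both sources whose `field` values differ."""
    conflicts = {}
    for key in source_a.keys() & source_b.keys():
        a = source_a[key].get(field)
        b = source_b[key].get(field)
        if a != b:
            conflicts[key] = (a, b)
    return conflicts

# Hypothetical records keyed by customer ID
crm = {
    "C1": {"email": "ana@example.com"},
    "C2": {"email": "ben@example.com"},
}
billing = {
    "C1": {"email": "ana@example.com"},
    "C2": {"email": "ben@example.org"},
    "C3": {"email": "cy@example.com"},
}
conflicts = find_conflicts(crm, billing, "email")
```

Only customers found in both systems are compared; a customer that exists in one source but not the other is a completeness question rather than a contradiction.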

Uniqueness

Data uniqueness ensures that data records are distinct and don’t contain any duplicates. For example, in the student database for a university, every student should have a unique ID. This ensures the efficient management of accurate records for enrollment, grades, and academic and medical history.
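The student ID example can be checked by counting how often each ID appears. This is a minimal sketch; the `student_id` field name and the sample records are assumptions.

```python
from collections import Counter

def duplicate_ids(rows, id_field="student_id"):
    """Return the IDs that appear more than once, in sorted order."""
    counts = Counter(row[id_field] for row in rows)
    return sorted(value for value, n in counts.items() if n > 1)

# Hypothetical student records where one ID was issued twice
students = [
    {"student_id": "S001", "name": "Ana"},
    {"student_id": "S002", "name": "Ben"},
    {"student_id": "S001", "name": "Cy"},
]
duplicates = duplicate_ids(students)
```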

Validity

Data validity refers to whether data conforms to defined rules, ranges, types, and specific formats or standards. For example, dates should follow a specific format (such as MM/DD/YYYY), the age column of a database should not contain negative values, and the recorded air temperature of a city can’t be 80 degrees Celsius.
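The three example rules can be sketched as simple checks. The field names are hypothetical, and the plausible temperature range of -90 to 60 degrees Celsius is an assumption chosen for illustration; pick bounds that suit your own data.

```python
from datetime import datetime

def validity_errors(record):
    """Return a list of validity rule violations for one record."""
    errors = []
    try:
        # strptime raises ValueError for wrong formats and impossible dates
        datetime.strptime(record["visit_date"], "%m/%d/%Y")
    except ValueError:
        errors.append("visit_date is not a valid MM/DD/YYYY date")
    if record["age"] < 0:
        errors.append("age is negative")
    # Assumed plausible range for air temperature in degrees Celsius
    if not -90 <= record["temperature_c"] <= 60:
        errors.append("temperature_c is outside the plausible range")
    return errors
```

A record with `visit_date` set to `"13/01/2024"` fails the date rule because there is no month 13, which shows how a format check also catches day and month being swapped.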

Timeliness

Timeliness indicates how fresh the data is: whether it is up to date and available when it is needed. For industries like finance, timeliness is very important because stock prices change in real time.
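Freshness is commonly checked by comparing a record’s last update time against a maximum allowed age. The sketch below assumes timezone-aware timestamps; the `is_fresh` function and the one-minute threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone

def is_fresh(last_updated, max_age, now=None):
    """True if the data was updated within `max_age` of `now` (default: current UTC time)."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age

# Hypothetical check: a stock quote must be at most one minute old
check_time = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
recent_quote = datetime(2024, 1, 1, 11, 59, 30, tzinfo=timezone.utc)
stale_quote = datetime(2024, 1, 1, 11, 50, 0, tzinfo=timezone.utc)

recent_ok = is_fresh(recent_quote, timedelta(minutes=1), now=check_time)
stale_ok = is_fresh(stale_quote, timedelta(minutes=1), now=check_time)
```

The right threshold depends on the use case: a minute may be too slow for trading data, while a daily refresh may be perfectly timely for a monthly report.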

Data Quality Activities and Lifecycle

Effective data quality management involves a continuous cycle of activities designed to ensure data accuracy, consistency, and reliability. This lifecycle encompasses key processes that organizations follow to maintain high data quality standards throughout the data journey.

The core stages of the data quality lifecycle include:

Data Ingestion and Collection

Ensuring data is gathered from reliable sources and meets initial quality checks before entering the system.

Data Profiling and Assessment

Analyzing data to identify anomalies, inconsistencies, and patterns to assess overall data health.

Data Cleansing and Standardization

Correcting errors, removing duplicates, and aligning data with predefined standards to improve consistency.

Data Validation and Monitoring

Applying validation rules and continuously monitoring data for accuracy, completeness, and integrity.

Data Governance and Compliance

Implementing governance frameworks to define data policies, ensure compliance, and establish accountability for data owners.

Ongoing Quality Improvement

Leveraging automated observability tools to track, analyze, and enhance data quality over time.

Data Quality Management Framework and Best Practices

A robust Data Quality Management (DQM) framework is essential for ensuring that data is accurate, reliable, and actionable across its lifecycle. This framework provides a systematic approach to maintaining data integrity and consistency while aligning with business goals.

Key Components of a Data Quality Management Framework

Data Governance Structure

Establishing clear policies, roles, and responsibilities to oversee data quality initiatives.

Data Quality Metrics

Defining measurable standards like accuracy, completeness, and consistency to assess data quality.

Automated Monitoring

Implementing automated systems to track data quality in real-time, ensuring proactive issue detection and resolution.

Feedback Loops

Incorporating user feedback to improve data accuracy and align with evolving business needs.

Compliance Framework

Ensuring adherence to industry regulations and data governance policies to maintain data integrity.

Best Practices for Effective Data Quality Management

Establish Clear Data Ownership

Assign responsibility to specific teams or individuals for maintaining data accuracy and resolving issues.

Implement Automated Quality Checks

Use automated tools to detect anomalies, validate data, and ensure continuous monitoring.

Regular Data Audits

Conduct periodic data audits to identify gaps and ensure compliance with data standards.

Standardize Data Processes

Create uniform processes for data entry, transformation, and validation to minimize inconsistencies.

Foster Cross-Department Collaboration

Ensure alignment between data producers and consumers to maintain consistency across the organization.

A well-structured data quality management framework not only reduces errors but also enhances decision-making, regulatory compliance, and operational efficiency.

Data Quality Management Process

The Data Quality Management (DQM) process is a structured approach to ensure data remains accurate, consistent, and reliable throughout its lifecycle. It involves a series of well-defined steps that help organizations identify, assess, and resolve data quality issues effectively.

Data Assessment and Profiling

The first step involves analyzing the existing datasets to understand their structure, content, and quality. This includes identifying missing values, inconsistencies, duplicates, and other anomalies.

For instance, a financial institution might use data profiling to detect discrepancies or data errors in customer records, ensuring all entries meet regulatory standards.

Data Quality Issue Identification

After profiling, organizations must pinpoint specific data quality issues that impact business processes. These issues could range from inaccurate customer information to inconsistent sales data across multiple systems.

By categorizing these issues by dimension, such as accuracy, completeness, and validity, organizations can prioritize what needs to be fixed first.

Data Cleansing and Standardization

Once issues are identified, the next step is to clean and standardize the data. This involves correcting errors, removing duplicates, and ensuring data adheres to organizational standards and industry regulations.

For example, standardizing customer addresses across systems helps maintain consistency and reduces errors during data integration.

Data Validation and Monitoring

To maintain long-term data quality, organizations implement data validation rules and continuous monitoring. This step ensures new data entering the system meets predefined criteria, while automated data monitoring also helps detect and resolve future issues in real time.

A healthcare provider, for instance, might use automated validation to ensure patient records align with demographic and clinical guidelines.

Data Governance and Documentation

Establishing a clear data governance framework ensures ongoing accountability for data quality. Organizations document data quality policies, responsibilities, and workflows to maintain consistent data practices across departments.

This governance framework also fosters collaboration between data engineers, analysts, and business leaders to align data quality efforts with organizational goals.

Continuous Improvement

Data quality management is an ongoing process. Organizations should regularly revisit their data quality frameworks to adapt to changing business needs, evolving technologies, and new regulatory requirements.

By fostering a culture of continuous improvement, businesses can maintain high-quality data and drive better decision-making over time.

Benefits of Effective Data Quality Management

Data-Driven Decision-Making

With effective data quality management practices, organizations can confidently make business decisions based on trustworthy, high-quality data. Data and business teams can trust their organization’s data landscape, which increases data utilization and promotes data-driven decision-making.

Enhanced Productivity

Data quality management ensures that organizations handle data quality issues in an automated and augmented manner, reducing the manual handling of data quality errors. Automation reduces the time taken to identify and resolve data quality issues, so employees spend less time dealing with errors and can focus on their core data management tasks.

Competitive Advantage

A business’s reputation precedes it, and a good reputation is a competitive advantage. High-quality data plays an important role in maintaining that reputation, while low-quality data can erode customers’ trust and leave them dissatisfied with the business’s products and services.

Financial Health Improvement

At the start of this blog, we saw that the implications of poor data quality can be disastrous. Data quality management helps organizations avoid the financial losses that poor data quality causes.

Data Governance and Compliance

Data quality management practices, by ensuring high data quality, enable organizations to meet regulatory and compliance requirements. With effective governance and compliance through data quality management, organizations can avoid substantial penalties and severe damage to their brand value.

Efficient Business Processes

Reliable data ensures smoother and more efficient business processes across various departments. This includes faster reporting, streamlined workflows, and better collaboration between teams.

Conclusion

Ensuring high-quality data through effective data quality management practices is crucial for organizations aiming to make informed decisions and improve operational efficiency. By employing methods like data profiling, cleansing, standardization, validation, and automated data quality monitoring, companies can address data inaccuracies and inconsistencies proactively.

Data governance also plays a critical role by establishing clear guidelines and accountability for data management, ensuring data integrity and compliance with regulations. Ultimately, investing in data quality management practices not only enhances data reliability but also supports better business processes, regulatory compliance, and stakeholder trust. Organizations that prioritize data quality management are better positioned to capitalize on their data assets for strategic decision-making and sustained growth.

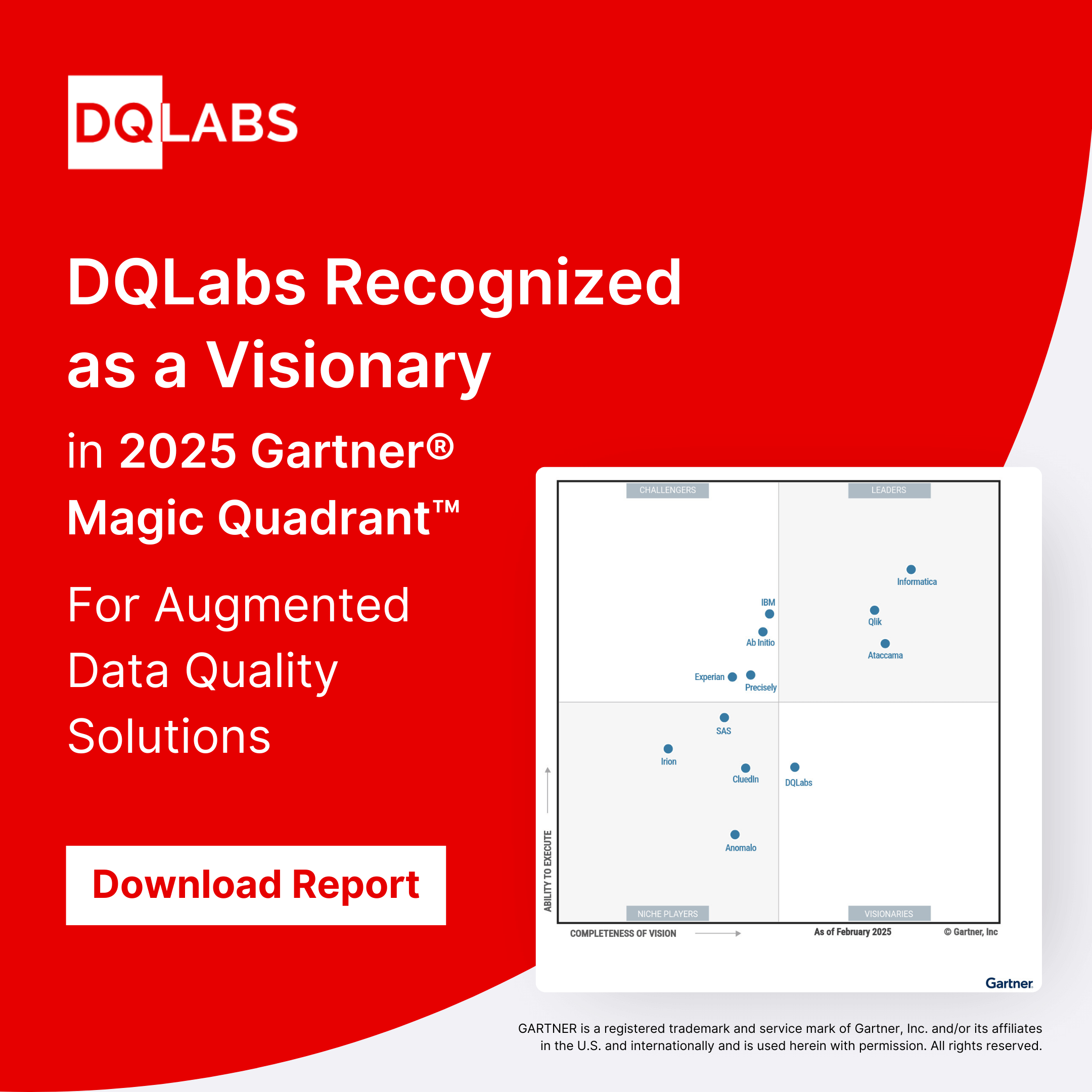

Organizations that want to implement robust data quality management practices should explore DQLabs, the modern data quality management platform. DQLabs will enable organizations to have a competitive advantage in today’s digital marketplace through Data Quality Management.

Source: [1] https://www.gartner.com/smarterwithgartner/how-to-improve-your-data-quality/

FAQs

Can AI Improve Data Quality Management?

Yes, AI can significantly enhance data quality management by automating complex tasks and improving data accuracy in real time. AI-powered systems can detect anomalies, identify patterns, and resolve inconsistencies faster than traditional methods.

For instance, machine learning algorithms can continuously monitor data pipelines to detect errors like missing values or schema changes and alert teams immediately. This proactive approach reduces manual intervention and ensures high-quality, accurate and reliable data for business decision-making.
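To show what detecting a schema change means in practice, here is a simple rule-based sketch. It is not a machine learning model, only a comparison of an incoming record against an expected schema; the `schema_drift` function, field names, and types are hypothetical.

```python
def schema_drift(expected, record):
    """Compare one record against an expected {field: type} schema."""
    missing = sorted(expected.keys() - record.keys())
    unexpected = sorted(record.keys() - expected.keys())
    wrong_type = sorted(
        name
        for name in expected.keys() & record.keys()
        # None is treated as a missing value, not a type error
        if record[name] is not None and not isinstance(record[name], expected[name])
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}

# Hypothetical expected schema and an incoming record that has drifted
expected = {"order_id": int, "amount": float, "currency": str}
record = {"order_id": 7, "amount": "19.99", "region": "EU"}
drift = schema_drift(expected, record)
```

A monitoring job could run a check like this on each batch and alert the team when any of the three lists is non-empty.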

What Industries Benefit the Most from Data Quality Management?

Several industries rely on robust data quality management to maintain operational efficiency, ensure compliance, and drive better outcomes:

- Healthcare: Accurate patient records are crucial for delivering quality care and meeting regulatory standards.

- Finance: High-quality data supports accurate reporting, fraud detection, and compliance with financial regulations.

- Retail & E-commerce: Clean data helps track customer behavior, manage inventory, and optimize marketing.

- Manufacturing: Reliable data supports supply chain management and quality control.

- Telecommunications: Accurate data supports correct customer billing and better service delivery.

Can AI improve data quality management?

Yes, AI can enhance data quality management by automating data profiling, cleansing, and validation. It quickly identifies and corrects errors, ensuring accuracy, completeness, and consistency across systems.

AI also improves data tracking, detects issues early, and enables intelligent data matching and deduplication. This helps organizations maintain high-quality data, improve compliance, and make better decisions.