
Artificial intelligence (AI) and machine learning (ML) are no longer futuristic ideas. They have long since moved from research labs to the heart of modern industries. Large language models (LLMs) like ChatGPT, Bard, and others now power a growing range of conversational and advanced analytics interfaces. These technologies are changing how we operate and solve problems.

What you will learn

- AI and ML rely on high-quality, well-managed data to produce accurate, reliable, and unbiased results.

- Poor data quality causes model failures, financial losses, extensive data cleaning, and trust issues in AI projects.

- Key AI/ML challenges include managing data bias, overfitting, computational demands, explainability, model drift, and integration hurdles.

- Effective data strategies involve clear collection protocols, precise annotation, thorough cleaning, and continuous quality assessment using advanced tools.

- Continuous monitoring and retraining in production are vital to maintain data relevance and ensure ongoing AI/ML model performance.

At the core of this technological advancement is data: the foundation for how AI learns, predicts, and improves. But not all data is created equal. Poor-quality data leads to unreliable predictions, biased results, and wasted investment in AI projects. High-quality data, on the other hand, allows models to perform as intended and deliver accurate, trustworthy outcomes. Yet many organizations fail to prioritize the quality of the data feeding these systems. According to Gartner, 60% of organizations do not assess the financial impact of poor data quality.

To quote Andrew Ng, founder of DeepLearning.AI, “The data is food for AI.”

Just as humans need nutritious food for good health, AI needs high-quality data for good performance. But as the volume of data grows, so do the challenges of managing and maintaining it. Questions of accuracy, consistency, completeness, and relevance are becoming harder to ignore. For those interested in generative AI, a field expected to create significant economic value globally, the ability to manage data quality is a critical factor in achieving results.

This blog post explains why data quality matters, the challenges organizations face in maintaining it, and the tools and strategies that can help your AI/ML projects succeed. For anyone building AI-driven solutions, understanding the role of data quality is the first step toward meaningful progress.

The Consequences of Using Low-Quality Data in AI/ML Projects

Low-quality data affects every stage of an AI model’s lifecycle. During training, noisy or incomplete datasets can mislead the model, resulting in poor performance and inaccurate predictions. These effects have a direct business impact and can determine whether AI initiatives succeed or fail.

Here are five critical effects of poor data quality on ML development:

1. Model Failure in Production:

Despite high test accuracy, models often fail in real-world scenarios when they encounter data that differs from their training sets, because they have learned surface patterns rather than true relationships.

2. Cascading Financial Impact:

The costs ripple throughout organizations. Zillow, for example, lost hundreds of millions of dollars when the ML home-pricing models behind its Zillow Offers home-buying business were misled by poor data. Such costs include both direct losses and the ongoing expense of maintaining underperforming systems.

3. Data Preparation Bottleneck:

Data scientists are commonly estimated to spend 60-80% of their time on data cleaning rather than model development, causing significant project delays and missed opportunities. What should take weeks stretches into months.

4. Technical Debt and Maintenance:

Poor data quality creates compounding technical debt, requiring complex systems of data cleaning, validation, and transformation that demand constant attention and resources. This becomes especially problematic at scale.

5. Accuracy and Trust Issues:

Without quality data, models produce unreliable predictions with unclear confidence levels, leading stakeholders to question the validity of ML initiatives and their practical business value.

Ultimately, data quality determines the reliability and usability of AI systems. Addressing these challenges is essential to build models that provide consistent, accurate, and actionable results.

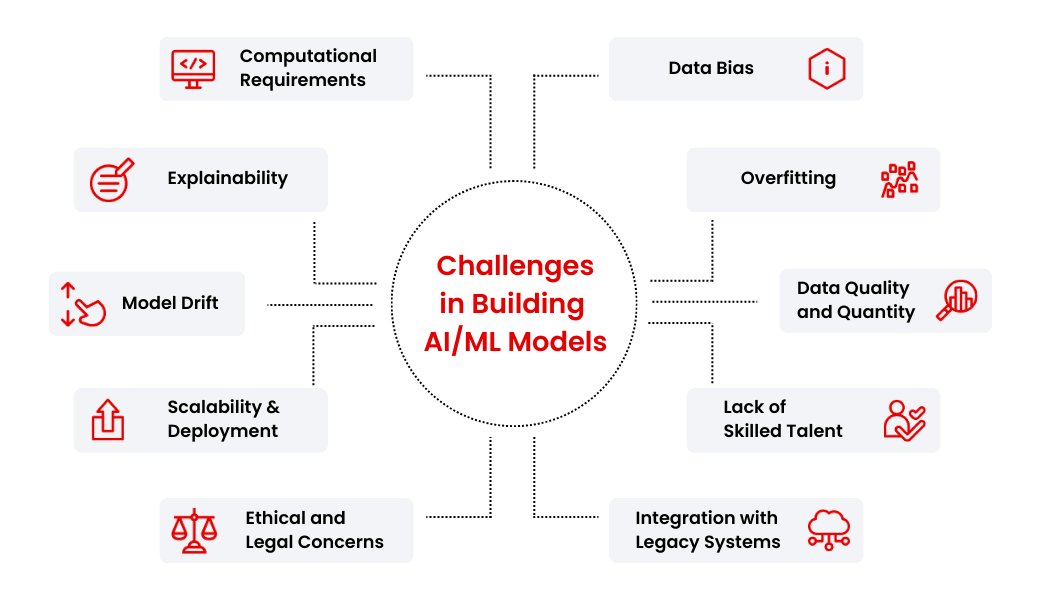

Challenges in Building AI/ML Models

Managing data from diverse sources introduces inconsistencies, errors, and missing entries that can disrupt training processes. For example, mismatched formats or incomplete records make it difficult for models to identify meaningful patterns. Additionally, data evolves rapidly—information that was correct yesterday may no longer reflect current conditions, particularly in fast-changing industries like finance or media.

The sheer scale of data used in AI presents another challenge. As datasets grow larger, detecting and addressing errors becomes increasingly difficult. Automated tools, while helpful, are not infallible: they may miss nuanced issues or perpetuate existing biases.

Here are some of the major challenges faced while building AI/ML projects:

- Computational Requirements: Training complex models demands significant computational power and memory, along with robust infrastructure for data storage and processing. Limited resources can slow your progress, though scalable cloud solutions can ease these constraints.

- Data Bias: Bias in datasets leads to unfair or inaccurate results. This can stem from unrepresentative data or collection methods. Addressing this requires diverse, representative datasets and preprocessing techniques like resampling or feature selection.

- Overfitting: Overfitting occurs when a model performs well on training data but poorly on unseen data. Techniques such as regularization, early stopping, and cross-validation can help mitigate this issue, ensuring better generalization.

- Explainability: Complex models like neural networks are often difficult to interpret. Transparency can be improved by choosing simpler, more interpretable models where possible, or by applying techniques such as feature importance and partial dependence plots to understand how a model arrives at its predictions.

- Model Drift: Changing data patterns over time reduce model performance. Addressing this requires continuous monitoring and retraining on updated data. Techniques like rolling windows and incremental learning help models adapt to these changes.

- Data Quality and Quantity: The success of AI/ML projects heavily relies on high-quality and sufficient data. Incomplete, noisy, or unstructured data can lead to suboptimal models. To overcome this, data cleaning, augmentation, and synthetic data generation methods may be employed to enhance data reliability and volume, helping the model learn more effectively.

- Scalability and Deployment: Scaling AI/ML models from development to production can be challenging, particularly when models need to handle vast amounts of real-time data or operate in resource-constrained environments. Efficient deployment requires optimizing model performance, reducing inference time, and ensuring that the infrastructure can scale dynamically to accommodate changing loads.

- Lack of Skilled Talent: The complexity of AI/ML models and the need for interdisciplinary expertise can make it difficult to find qualified data scientists, machine learning engineers, and domain experts. Bridging the skills gap through continuous learning, partnerships with universities, or leveraging automated machine learning tools (AutoML) can help address this issue.

- Ethical and Legal Concerns: AI/ML projects, especially in fields like healthcare, finance, and law, raise ethical and legal questions around privacy, security, and accountability. Compliance with data protection laws (e.g., GDPR) and ethical AI practices, such as ensuring fairness and transparency, are critical for successful implementation and public trust.

- Integration with Legacy Systems: Many organizations face difficulties integrating AI/ML models into their existing infrastructure or legacy systems. Ensuring seamless interoperability and communication between new AI systems and older technologies is often complex and requires thoughtful planning, API development, and sometimes, system overhauls.
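The Data Bias item above mentions resampling as one way to counter unrepresentative data. Here is a minimal sketch of random oversampling, one common resampling technique, in plain Python, assuming a small in-memory dataset. The function name `random_oversample` and the toy fraud labels are hypothetical, and real projects would more typically use a dedicated library. It duplicates randomly chosen minority-class rows until every class matches the size of the largest class.

```python
import random
from collections import Counter, defaultdict


def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until every class matches the largest one."""
    rng = random.Random(seed)  # seeded so the resampled dataset is reproducible
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)
    target = max(len(members) for members in by_class.values())

    out_rows, out_labels = [], []
    for label, members in by_class.items():
        # Keep every original row, then top up with randomly chosen duplicates.
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels


# Hypothetical toy data: four "ok" transactions and one "fraud" transaction.
rows = [[0.1], [0.2], [0.3], [0.4], [0.9]]
labels = ["ok", "ok", "ok", "ok", "fraud"]
new_rows, new_labels = random_oversample(rows, labels)
print(Counter(new_labels))  # each class now has 4 rows
```

Resample only the training split, after the test data has been set aside; otherwise duplicated rows can leak into evaluation and inflate scores. Oversampling also cannot add information the minority class lacks, so it complements, rather than replaces, collecting more representative data.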

Best Practices for Maintaining Data Quality in AI/ML Initiatives

If you want your AI initiatives to succeed, start by feeding them quality data. How do we get there? Here are a few steps to help you ensure data quality in your AI/ML projects.

Data Collection and Annotation

A well-defined data collection strategy ensures that the dataset aligns with the goals of the machine learning project. This includes identifying reliable sources, setting clear data requirements, and establishing standardized protocols for collection. Automation can minimize errors and improve consistency while also saving significant staff hours.

For supervised learning tasks, data annotation is important. Clear guidelines for labeling, coupled with regular quality checks, prevent inconsistencies that could skew model predictions. Using advanced annotation tools and platforms can streamline this process and help maintain diverse and accurate datasets.
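One widely used form of regular quality check on annotation is inter-annotator agreement. The sketch below computes Cohen's kappa for two annotators who labeled the same items. It is an illustration in plain Python, not a feature of any particular annotation platform, and the labels are made up. Kappa corrects raw agreement for the agreement expected by chance, so 1.0 means perfect agreement and values near 0 mean the annotators agree no more often than chance would predict.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty list of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same label if each labeled independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single, identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators for the same six support tickets.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "pos"]
print(round(cohens_kappa(annotator_1, annotator_2), 2))  # 0.33
```

A low score often signals that the labeling guidelines are ambiguous and need tightening before more data is annotated; what counts as acceptable agreement is a project-level judgment. For more than two annotators, measures such as Fleiss' kappa or Krippendorff's alpha are typically used instead.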

Cleaning and Preprocessing

Once collected, raw data often needs cleaning to address issues like missing values, outliers, and duplicates. Simple techniques such as mean/mode imputation, or more advanced methods like K-Nearest Neighbors (KNN) imputation, can fill gaps in your datasets effectively. Removing noise, deduplicating records, and standardizing formats make datasets more consistent and ready for model ingestion. Outlier detection and treatment can also keep extreme values from skewing the model.
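The deduplication and simple imputation steps described above can be sketched in plain Python. This version assumes records arrive as dictionaries with `None` marking a missing value. It drops exact duplicates first, so repeated rows do not bias the fill values, then applies mean imputation to numeric fields and mode imputation to categorical ones. The function and field names are hypothetical. For KNN-based imputation, libraries such as scikit-learn provide a `KNNImputer` class.

```python
from collections import Counter
from statistics import mean


def clean_records(records, numeric_fields, categorical_fields):
    """Drop exact duplicates, then fill missing values (None) with the column mean or mode."""
    # Deduplicate while keeping the first occurrence of each record, in order.
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the caller's records are not mutated

    # Compute one fill value per field from the values that are present.
    fills = {}
    for field in numeric_fields:
        present = [r[field] for r in unique if r.get(field) is not None]
        fills[field] = mean(present) if present else None
    for field in categorical_fields:
        present = [r[field] for r in unique if r.get(field) is not None]
        fills[field] = Counter(present).most_common(1)[0][0] if present else None

    # Replace missing entries with the computed fill values.
    for rec in unique:
        for field, value in fills.items():
            if rec.get(field) is None:
                rec[field] = value
    return unique


raw = [
    {"age": 34, "city": "Pune"},
    {"age": None, "city": "Austin"},
    {"age": 34, "city": "Pune"},  # exact duplicate of the first record
    {"age": 40, "city": None},
    {"age": 28, "city": "Pune"},
]
for row in clean_records(raw, numeric_fields=["age"], categorical_fields=["city"]):
    print(row)
```

In a real pipeline, compute the fill values on the training data only and reuse them for validation, test, and production data, so that information from those sets does not leak into training.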

Preprocessing includes scaling and normalization, which bring feature values onto comparable ranges. This matters for algorithms that are sensitive to magnitude differences, such as distance-based methods and models trained with gradient descent: without it, features with large magnitudes can dominate the training process and distort model predictions.
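Two common ways to put features on a comparable scale are min-max scaling, which maps values into [0, 1], and z-score standardization, which centers values at 0 with unit standard deviation. The plain-Python sketch below shows both. The function names and sample columns are illustrative assumptions; libraries such as scikit-learn offer equivalent transformers (`MinMaxScaler`, `StandardScaler`).

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to rescale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Convert values to z-scores: mean 0, population standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical features on very different scales.
income = [30_000, 45_000, 60_000, 120_000]
age = [25, 35, 45, 55]
print(min_max_scale(income))
print(standardize(age))
```

Whichever method you choose, fit its parameters (minimum and maximum, or mean and standard deviation) on the training data and apply those same values at inference time. Rescaling production data with its own statistics would silently shift the inputs the model sees.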

Assessing Quality at Every Step

Continuous assessment of data quality is non-negotiable. Tools like DQLabs perform real-time data quality checks, schema validation, and statistical distribution monitoring to ensure data aligns with expectations.
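To make schema validation concrete, here is a minimal sketch of rule-based record checks in plain Python. It is not DQLabs' API: the schema format, the `validate_record` function, and the order fields are hypothetical. Each rule can require a field, restrict its type, bound its range, or limit it to an allowed set, and fields the schema does not recognize are flagged.

```python
def validate_record(record, schema):
    """Return a list of problems found in one record; an empty list means it passed."""
    problems = []
    for field, rule in schema.items():
        value = record.get(field)
        if value is None:
            if rule.get("required", False):
                problems.append(f"{field}: missing required value")
            continue
        if not isinstance(value, rule["type"]):
            problems.append(f"{field}: unexpected type {type(value).__name__}")
            continue  # range and set checks assume the right type
        if "min" in rule and value < rule["min"]:
            problems.append(f"{field}: {value} is below the minimum {rule['min']}")
        if "max" in rule and value > rule["max"]:
            problems.append(f"{field}: {value} is above the maximum {rule['max']}")
        if "allowed" in rule and value not in rule["allowed"]:
            problems.append(f"{field}: {value!r} is not an allowed value")
    # Unknown fields often indicate an upstream schema change.
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"{field}: unexpected field")
    return problems


# Hypothetical schema for incoming order records.
SCHEMA = {
    "order_id": {"type": str, "required": True},
    "amount": {"type": (int, float), "required": True, "min": 0},
    "currency": {"type": str, "required": True, "allowed": {"USD", "EUR", "INR"}},
    "coupon": {"type": str},
}

good = {"order_id": "A-1", "amount": 19.99, "currency": "USD"}
bad = {"order_id": "A-2", "amount": -5, "currency": "GBP", "channel": "web"}
print(validate_record(good, SCHEMA))  # []
for problem in validate_record(bad, SCHEMA):
    print(problem)
```

Returning a list of readable problems, rather than raising on the first failure, makes it easy to count failures per field over a batch and alert when a rate crosses a threshold.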

Proactively monitoring datasets for unexpected variations is also important for maintaining data integrity and reliability. Advanced anomaly detection algorithms and automated pipeline validation can further protect the integrity of the data flow. DQLabs uses a range of such techniques, including both unsupervised and supervised learning methods. For example, machine learning algorithms such as Isolation Forest, K-Nearest Neighbors (kNN), Support Vector Machines (SVM), DBSCAN, Elliptic Envelope, and Local Outlier Factor (LOF), along with statistical rules such as Z-score and boxplot (interquartile range) thresholds, offer diverse approaches to anomaly detection. Time-series anomaly detection complements these methods, and metrics such as classification reports and confusion matrices help evaluate how well each detector performs.
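The Z-score and boxplot methods mentioned above are simple enough to sketch directly. Below, `zscore_outliers` flags values far from the mean in standard-deviation units, and `iqr_outliers` applies the boxplot rule of flagging points more than 1.5 interquartile ranges outside the quartiles. Both are illustrative plain-Python versions built on the standard `statistics` module (`quantiles` needs Python 3.8+), not how DQLabs implements them, and the sensor readings are made up.

```python
from statistics import mean, pstdev, quantiles


def zscore_outliers(values, threshold=3.0):
    """Indices of values more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


def iqr_outliers(values, k=1.5):
    """Indices of values outside [Q1 - k*IQR, Q3 + k*IQR], the boxplot whisker rule."""
    q1, _, q3 = quantiles(values, n=4)  # quartiles, default "exclusive" method
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < lo or v > hi]


# Hypothetical sensor readings with one obvious spike at index 7.
readings = [10.2, 9.8, 10.1, 10.0, 9.9, 10.3, 10.1, 25.0]
print(zscore_outliers(readings, threshold=2.5))  # [7]
print(iqr_outliers(readings))  # [7]
```

The example passes `threshold=2.5` deliberately. With the population standard deviation, no point in a sample of n values can have a z-score above √(n − 1), so the common 3.0 cutoff can never fire on these eight readings, partly because the spike itself inflates the standard deviation. The quartile-based rule is far less affected by the spike, which is one reason to prefer it on small samples.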

During training, careful feature selection that focuses on the attributes contributing most to the target outcome reduces noise. Cross-validation checks that the model generalizes beyond the data it was trained on, while continuous model monitoring catches evolving data patterns so the system can adapt and remain reliable.
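One simple form of feature selection is a correlation filter: score each feature by its absolute Pearson correlation with the target and keep those above a threshold. The sketch below is illustrative only. The feature names, the 0.3 threshold, and the `select_features` helper are assumptions, and a correlation filter captures only linear, one-feature-at-a-time relationships, so model-based importance or wrapper methods may find signals it misses.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (sx * sy)


def select_features(columns, target, min_abs_corr=0.3):
    """Keep features whose |correlation| with the target meets the threshold, strongest first."""
    scores = {name: pearson(values, target) for name, values in columns.items()}
    kept = [name for name, r in scores.items() if abs(r) >= min_abs_corr]
    kept.sort(key=lambda name: abs(scores[name]), reverse=True)
    return kept, scores


# Hypothetical features and a numeric target.
target = [1, 2, 3, 4, 5, 6]
columns = {
    "tenure_months": [2, 4, 6, 8, 10, 12],
    "support_tickets": [5, 6, 3, 4, 1, 2],
    "region_code": [4, 1, 6, 6, 1, 4],
    "constant_flag": [1, 1, 1, 1, 1, 1],
}
kept, scores = select_features(columns, target)
print(kept)  # ['tenure_months', 'support_tickets']
```

When combined with cross-validation, run the selection inside each fold on that fold's training portion rather than on the full dataset; selecting features on all the data first lets information from the validation rows leak into the choice.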

Monitoring in Production

Maintaining data quality does not stop at deployment. Real-world environments are dynamic, and incoming data often drifts away from the training-set distribution. Monitoring for data drift and retraining models on updated datasets keeps predictions relevant and accurate.
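A common way to quantify drift for a single numeric feature is the Population Stability Index (PSI), which compares how a production sample spreads across bins defined on the training (baseline) sample. The plain-Python sketch below uses equal-width bins. The function name, the bin count, and the small `eps` floor that avoids taking the log of zero for empty bins are assumptions, not a standard implementation.

```python
from math import log


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` relative to `baseline` (equal-width bins)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to width 1.0 if the baseline is constant

    def bin_shares(sample):
        counts = [0] * bins
        for v in sample:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so empty bins do not produce log(0).
        return [max(count / len(sample), eps) for count in counts]

    base_shares, cur_shares = bin_shares(baseline), bin_shares(current)
    return sum((c - b) * log(c / b) for b, c in zip(base_shares, cur_shares))


# Hypothetical feature values: training data, then a production batch shifted upward.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
print(round(psi(training, training), 3))  # 0.0
print(round(psi(training, production), 3))
```

A frequently cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating, though thresholds should be tuned per feature. Computing PSI over rolling windows, for example each day's traffic against the training baseline, turns it into a simple drift alert that can trigger retraining.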

Adopting these practices and focusing on key parameters at every stage enables organizations to maintain machine learning systems that are reliable, trustworthy, and effective.

Wrapping Up

By maintaining data quality in machine learning projects, you can build reliable, accurate, and interpretable models. Effective data quality processes help organizations reduce costs, improve decision-making, and gain a competitive edge. As AI/ML evolves, the role of data quality becomes increasingly significant. Organizations that address data challenges proactively will be well prepared to explore new possibilities in these areas and remain ahead in their fields.

Serve your AI/ML projects clean data, and let DQLabs show you how. Talk to our experts today!
