Maintaining data quality is essential to any web scraping or data integration project. Data-informed businesses rely on customer data: it improves their products, provides valuable insights, and drives new ideas. However, as a company expands its data collection, it becomes more vulnerable to data quality issues. Poor-quality data, such as inaccurate, missing, or inconsistent data, provides a weak foundation for decision making and can no longer be used to support arguments or ideas. And once trust in the information is lost, the data, the data team, and any data infrastructure lose their value as well.

ETL (Extract, Transform, Load) is the process of extracting, transforming, and loading data. It defines what data moves from your source sites to your readable database, when it moves, and how. Data quality, in turn, relies on implementing a system of checks that runs from the early stage of extraction to the final loading of your data into readable databases.

Data quality ETL procedure

Extract

Scheduling, maintaining, and monitoring your extraction jobs are important for keeping your data up to date. At the extraction phase you already know what your data should look like, so you should implement scripts that check its quality. This way, problems can be troubleshot close to the source itself, and you can take action before the data moves on to be transformed.
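
As a minimal sketch of such an extract-phase script, the example below checks a freshly scraped batch before it is handed to the transform step. The field names, the row threshold, and the function name `check_extracted_batch` are assumptions made for illustration, not part of any particular tool.

```python
from typing import Any

# Hypothetical expectations for one scraped source; adjust to your own data.
REQUIRED_FIELDS = {"url", "title", "price"}
MIN_EXPECTED_ROWS = 2


def check_extracted_batch(rows: list[dict[str, Any]]) -> list[str]:
    """Return human-readable problems found in a freshly extracted batch."""
    problems = []
    # A batch that is much smaller than usual often means the source changed.
    if len(rows) < MIN_EXPECTED_ROWS:
        problems.append(
            f"only {len(rows)} rows extracted, expected at least {MIN_EXPECTED_ROWS}"
        )
    # Every row should carry the fields the later stages depend on.
    for index, row in enumerate(rows):
        missing = REQUIRED_FIELDS - set(row)
        if missing:
            problems.append(f"row {index} is missing fields: {sorted(missing)}")
    return problems


batch = [
    {"url": "https://example.com/a", "title": "Item A", "price": "19.99"},
    {"url": "https://example.com/b", "title": "Item B"},
]
extract_problems = check_extracted_batch(batch)
print(extract_problems)
```

If the returned list is not empty, the job can stop or raise an alert instead of passing the batch on.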

Transform

Transformation is when most of the quality checks are done. Whatever tool is used, it should perform the following:

- Data profiling: the data is examined in terms of quality, volume, format, etc.

- Data cleansing and matching: related entries are merged while duplicates are removed.

- Data enrichment: the usefulness of your data is increased by adding other relevant information.

- Data normalization and validation: the integrity of information is checked, and validation errors are managed.
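
The steps above can be sketched in a few small functions. This is an illustration under stated assumptions rather than a complete pipeline: the `email` and `name` fields are hypothetical, duplicates are resolved by simply keeping the first row per email instead of merging related entries, and enrichment is left out because it depends on an external data source.

```python
from collections import Counter


def profile(rows):
    """Data profiling: row count plus the number of empty values per field."""
    empty_counts = Counter()
    for row in rows:
        for field, value in row.items():
            if value in (None, ""):
                empty_counts[field] += 1
    return {"row_count": len(rows), "empty_values": dict(empty_counts)}


def normalize(row):
    """Normalization: trim whitespace and lower-case the email (assumed match key)."""
    return {"email": row["email"].strip().lower(), "name": row["name"].strip()}


def deduplicate(rows):
    """Cleansing and matching: keep the first row seen for each email."""
    first_seen = {}
    for row in rows:
        first_seen.setdefault(row["email"], row)
    return list(first_seen.values())


def validate(rows):
    """Validation: split rows into valid ones and rejected ones."""
    valid, rejected = [], []
    for row in rows:
        is_ok = "@" in row["email"] and row["name"] != ""
        (valid if is_ok else rejected).append(row)
    return valid, rejected


raw_rows = [
    {"email": " Ada@Example.com ", "name": "Ada"},
    {"email": "ada@example.com", "name": "Ada L."},
    {"email": "not-an-email", "name": "Bob"},
    {"email": "eve@example.com", "name": ""},
]
report = profile(raw_rows)
clean_rows, rejected_rows = validate(deduplicate([normalize(r) for r in raw_rows]))
print(report, clean_rows, len(rejected_rows))
```

Keeping the rejected rows instead of silently dropping them is one way to make validation errors manageable: they can be logged, reviewed, or sent back for re-extraction.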

Load

At this point, you know your data. It has been transformed to fit your needs and, if your quality check system is effective, the data that reaches you is reliable. This way, you avoid overloading your database or data warehouse with unreliable or low-quality data, and you ensure that the results have been validated.
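
One way to keep unvalidated rows out of the database is to put a gate in front of the load step. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `products` table, its columns, and the `load_validated` helper are hypothetical and stand in for whatever warehouse and schema you actually use.

```python
import sqlite3


def load_validated(connection, rows, is_valid):
    """Insert only rows that pass is_valid; return (loaded, skipped) counts."""
    good_rows = [row for row in rows if is_valid(row)]
    with connection:  # commits on success, rolls back if the insert fails
        connection.executemany(
            "INSERT INTO products (sku, price) VALUES (:sku, :price)", good_rows
        )
    return len(good_rows), len(rows) - len(good_rows)


connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL NOT NULL)")

transformed_rows = [{"sku": "A1", "price": 9.5}, {"sku": "B2", "price": None}]
loaded, skipped = load_validated(
    connection, transformed_rows, lambda row: row["price"] is not None
)
row_count = connection.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(loaded, skipped, row_count)
```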

What is high-quality data?

Accurate data

When data is accurate, what the information says happened is what actually happened: the data correctly captures the real-world event that took place, and the recorded values are correct. Accuracy also means that the data is stored in a consistent and unambiguous format so that it is interpreted as intended. When this is done successfully, analysts can easily understand and work with the data, leading to more accurate insights based on that data.

Complete data

Simply put, complete data means there is no missing data. It also means that, to the extent possible, the data gives a complete picture of the “real-world” events that took place. Without comprehensive data, there is limited visibility into how customers behave on a website or app.
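
Completeness can be measured as the share of records that have a usable value for each field. The sketch below assumes a list of hypothetical tracking events with `user_id` and `page` fields, and treats `None`, an empty string, and an absent key all as missing.

```python
def completeness(rows, fields):
    """Return the share of rows (0.0 to 1.0) with a non-empty value per field."""
    total = len(rows)
    scores = {}
    for field in fields:
        filled = sum(1 for row in rows if row.get(field) not in (None, ""))
        scores[field] = filled / total if total else 0.0
    return scores


events = [
    {"user_id": "u1", "page": "/home"},
    {"user_id": "u2", "page": ""},
    {"user_id": None, "page": "/pricing"},
    {"user_id": "u4"},
]
completeness_scores = completeness(events, ["user_id", "page"])
print(completeness_scores)
```

A score well below 1.0 for a field that should always be present is a signal to look at the extraction step for that field.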

Relevancy

The data you collect should also be helpful for the campaigns and initiatives you plan to apply it to. Even if the information you get has all the other characteristics of quality data, it’s not helpful to you if it’s not relevant to your goals. It’s essential to set goals for your data collection so that you know what kind of data to collect.

Validity

Validity refers to how the data is collected rather than the information itself. Data is valid if it is of the correct type and falls within the appropriate range. If data does not meet these standards, you may have trouble organizing and analyzing it. Some software can help you convert data to the correct format.
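
A type-and-range check of this kind can be expressed as a small rule table. The fields, types, and limits in `RULES` below are assumptions chosen only to illustrate the idea.

```python
# Hypothetical rules: field name -> (expected type, minimum, maximum).
RULES = {"age": (int, 0, 130), "rating": (float, 0.0, 5.0)}


def validity_errors(record, rules):
    """Return one message per field that has the wrong type or is out of range."""
    errors = []
    for field, (expected_type, low, high) in rules.items():
        value = record.get(field)
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
        elif not low <= value <= high:
            errors.append(f"{field}: {value} is outside {low}..{high}")
    return errors


print(validity_errors({"age": 34, "rating": 4.5}, RULES))
print(validity_errors({"age": "34", "rating": 7.0}, RULES))
```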

Timeliness

Timeliness refers to how recently the event the data represents took place. Generally, data should be recorded soon after the real-world event. Data typically becomes less valuable and less accurate as time goes on. Data that reflects recent events is more likely to reflect current reality. Using outdated data can lead to inaccurate results and to actions that don’t reflect the present situation.
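
A simple timeliness check compares the age of a record with the maximum age you are willing to accept. The sketch below assumes each record carries a timezone-aware timestamp; the 24-hour limit and the `is_stale` helper are illustrative choices, not fixed rules.

```python
from datetime import datetime, timedelta, timezone


def is_stale(recorded_at, max_age, now=None):
    """Return True if the record is older than max_age."""
    # `now` can be injected so the check is reproducible in tests.
    now = now or datetime.now(timezone.utc)
    return now - recorded_at > max_age


reference_time = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
fresh_record = datetime(2024, 1, 10, 9, 0, tzinfo=timezone.utc)
old_record = datetime(2024, 1, 8, 9, 0, tzinfo=timezone.utc)

print(is_stale(fresh_record, timedelta(hours=24), now=reference_time))
print(is_stale(old_record, timedelta(hours=24), now=reference_time))
```

The acceptable age depends on the use case: prices scraped hourly go stale much faster than company addresses.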

Consistency

When comparing a data item or its counterpart across multiple data sets or databases, it should be the same. This lack of difference between various versions of a single data item is referred to as consistency. A data item should be consistent both in its content and its format. Different groups may be operating under different assumptions about what is true if your data isn’t consistent. This can mean that the various departments within your company will not be well-coordinated and may unknowingly work against one another.
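
To illustrate, the sketch below compares the same customers in two hypothetical data sets, `crm` and `billing`, each keyed by a shared customer ID, and reports every field whose value differs. The data set names, keys, and fields are assumptions for the example.

```python
def find_inconsistencies(source_a, source_b, fields):
    """Return (key, field, value_a, value_b) for each differing shared item."""
    mismatches = []
    # Only keys present in both data sets can be compared.
    for key in sorted(source_a.keys() & source_b.keys()):
        for field in fields:
            value_a = source_a[key].get(field)
            value_b = source_b[key].get(field)
            if value_a != value_b:
                mismatches.append((key, field, value_a, value_b))
    return mismatches


crm = {
    "c1": {"email": "ada@example.com", "country": "UK"},
    "c2": {"email": "bob@example.com", "country": "DE"},
}
billing = {
    "c1": {"email": "ada@example.com", "country": "United Kingdom"},
    "c3": {"email": "eve@example.com", "country": "FR"},
}
inconsistencies = find_inconsistencies(crm, billing, ["email", "country"])
print(inconsistencies)
```

Here the content agrees but the format does not ("UK" versus "United Kingdom"), which is exactly the kind of difference that normalization during the transform step is meant to remove.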

Conclusion

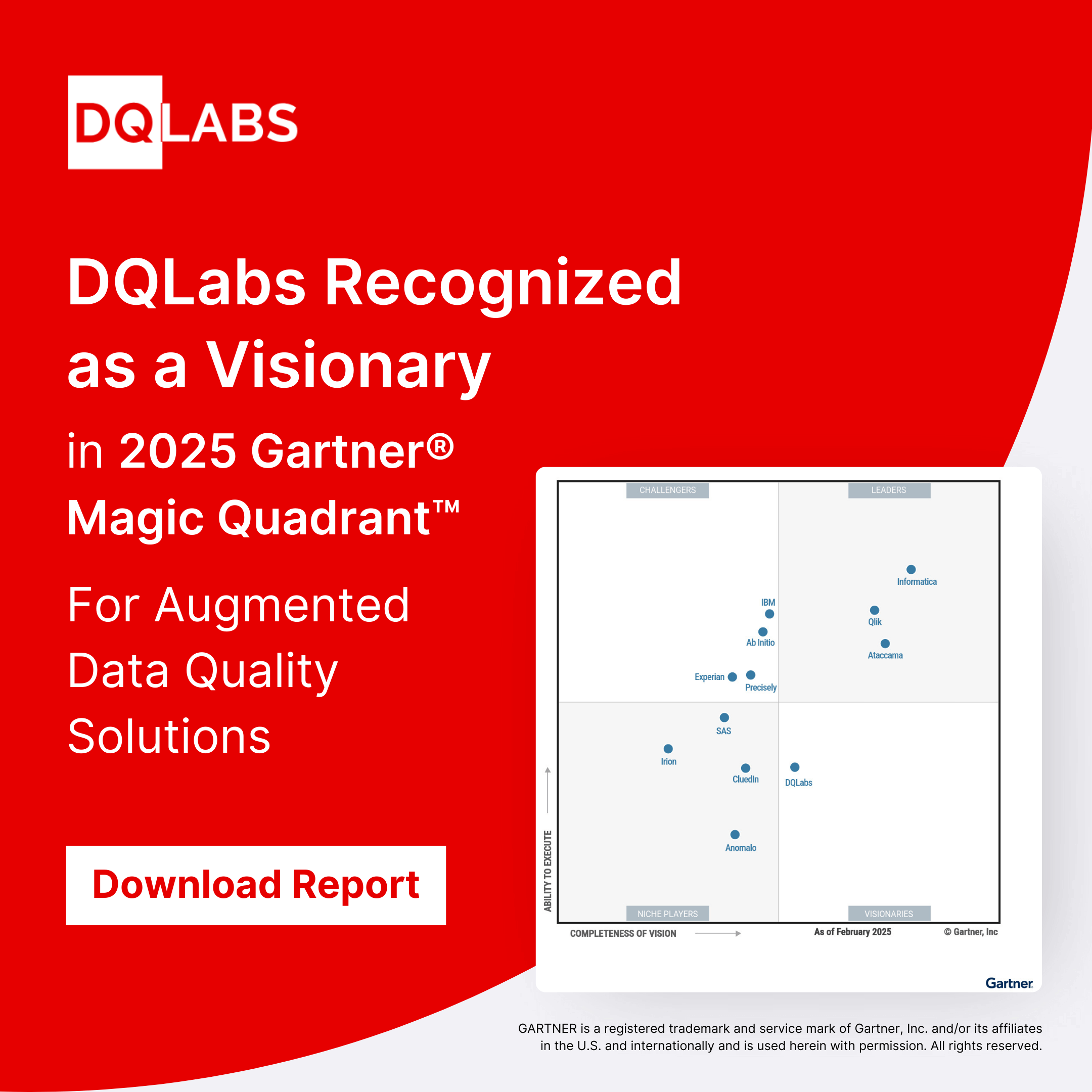

Having a robust ETL tool supported by a great scraper is crucial to any data aggregation project. But to ensure that the results meet your needs, you also need a quality check system in place. At DQLabs, we try to eliminate the traditional ETL approach and let you manage everything through a simple frontend interface by providing the data source access parameters. To learn more about how DQLabs uses AI and ML to manage data smarter and simplify these processes, request a demo.